All published articles of this journal are available on ScienceDirect.

High-Occupancy Vehicle Lane Enforcement System

Abstract

Introduction:

An automatic High-Occupancy Vehicle (HOV) lane enforcement system is developed and evaluated. Current manual enforcement by the police raises safety concerns and causes unnecessary traffic delays. On freeways in Korea, only vehicles carrying more than five passengers, including the driver, are permitted to use HOV lanes. Hence, counting the occupants of each vehicle is the core function of such a system.

Methods:

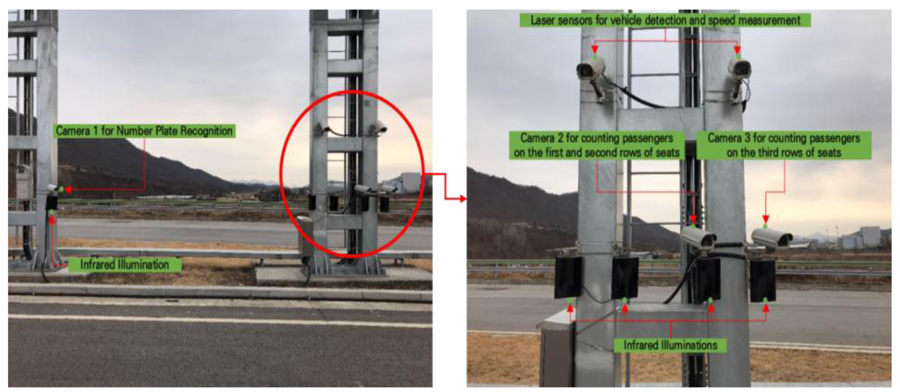

To enable fast detection, a YOLO-based passenger detection model was built. The system comprises three infrared cameras: two for detecting occupants in the passenger compartment and one for number plate recognition. It also employs multiple infrared illuminators operating at the same frequency as the cameras, as well as laser sensors for vehicle detection and speed measurement.

Results:

The performance of the developed system was evaluated with field data collected on a proving ground. The system showed an average passenger detection error of 9%. ANOVA tests revealed no statistically significant differences in detection error across vehicle speeds or numbers of passengers.

Conclusion:

The developed system can make HOV lane enforcement more efficient and safer.

1. INTRODUCTION

Many countries operate High-Occupancy Vehicle (HOV) lanes to increase passenger throughput by encouraging car-pooling or the use of public transit. HOV lanes also curb the use of passenger cars, reducing overall delays on freeways. According to a report [1], HOV lanes in Korea increase travel speed and passenger throughput by 29% and 4.5%, respectively, and reduce overall delays on the road by 29%. Approximately 180 lane-km of freeways in Korea are currently operated as HOV lanes. In North America, HOV lanes had grown to over 6,000 lane-km of freeways as of 2010, and the trend was upward [2]. Many European countries also operate HOV lanes, enabling faster and more reliable trips than those of non-HOVs [3].

However, the benefits of HOV lanes can be diminished by non-HOVs illegally using them. To deter such violations, the police periodically patrol stretches of these lanes. Yet this manual enforcement, shown in Fig. (1), can raise safety concerns and cause unnecessary delays when violators are pulled over to the shoulder. A report [4] from the United States argued that manual HOV lane enforcement catches no more than 10% of violations. A poll [5] conducted in Korea revealed that around 60% of respondents wanted the authorities to keep non-HOVs out of HOV lanes.

An automatic HOV lane enforcement system is therefore required to supplement manual enforcement. The core technological challenge for such a system is counting the passengers in a vehicle regardless of illumination conditions and window tinting. Pavlidis et al. [6-9] developed a vehicle occupant counting system based on near-infrared phenomenology combined with a fuzzy neural classification technique. Roulland [10] presented an infrared camera-based vehicle passenger detection system for HOV lane enforcement. Hao et al. [11] developed an occupant detection system that applies the Hough transform and the AdaBoost algorithm to near-infrared images. Nikolaos [12] evaluated the capability of infrared spectroscopy to count vehicle occupants for automatic HOV lane enforcement. All of these systems detect passengers only in the front row of seats, to identify whether a vehicle is carrying more than one occupant. Under Korean regulations, however, an HOV lane enforcement system must detect up to six passengers, which calls for a new system with enhanced performance.

Recent deep learning algorithms can enhance the passenger counting capability of HOV lane enforcement systems. In this study, an infrared-based HOV lane enforcement system incorporating a deep learning algorithm is developed and evaluated. Unlike other countries, including the United States, where vehicles with more than one occupant are permitted to use HOV lanes, South Korea allows only vehicles carrying more than five passengers, including the driver, to use HOV lanes on freeways under its Road Traffic Act. Hence, an HOV lane enforcement system in Korea must be able to count up to six occupants per vehicle. The developed system can serve as the core field device in the HOV lane enforcement procedure, as illustrated in Fig. (2).
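The eligibility rule above reduces to a threshold on the occupant count. The following is a minimal sketch, assuming the count already includes the driver; the function names and the `min_occupants` parameter are hypothetical illustrations, not part of the described system.

```python
# Minimal sketch of the freeway HOV eligibility rule described above.
# Assumption: occupant_count already includes the driver.
MIN_OCCUPANTS_KOREA = 6  # "more than five passengers, including the driver"


def is_hov_eligible(occupant_count: int, min_occupants: int = MIN_OCCUPANTS_KOREA) -> bool:
    """Return True if the vehicle carries enough occupants to use the HOV lane."""
    if occupant_count < 0:
        raise ValueError("occupant_count must be non-negative")
    return occupant_count >= min_occupants


def is_violation(occupant_count: int, min_occupants: int = MIN_OCCUPANTS_KOREA) -> bool:
    """A vehicle in the HOV lane with too few occupants is a violation candidate."""
    return not is_hov_eligible(occupant_count, min_occupants)


if __name__ == "__main__":
    for count in range(1, 8):
        status = "violation" if is_violation(count) else "eligible"
        print(f"{count} occupant(s): {status}")
```

Passing `min_occupants=2` would express the "more than one occupant" rule used elsewhere. Under the Korean threshold, a single undercount on a six-occupant vehicle turns a compliant vehicle into a flagged one, which is why counting accuracy at the top of the range matters most.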

2. SYSTEM DEVELOPMENT

2.1. Passenger Detection Model

Given the high speed of vehicles on freeways, fast detection is of paramount importance for a reliable HOV lane enforcement system. Hence, a passenger detection model based on You Only Look Once (YOLO) was established. YOLO frames object detection as a regression problem to spatially separated bounding boxes and associated class probabilities: it divides the input image into a grid and, for each grid cell, predicts bounding boxes, a confidence score for each box, and class probabilities [13].
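To make the grid formulation concrete, the following sketch decodes a YOLO-style output grid, assuming the original YOLO layout [13]: each of the S × S cells holds B boxes as (x, y, w, h, confidence) followed by C class probabilities, and each box's class-specific score is its confidence multiplied by the class probability. The class indices (for example, 0 for a face) and the score threshold are hypothetical. Non-maximum suppression, which a real detector applies afterwards, is omitted, and YOLOv3 itself uses a different, anchor-based output layout.

```python
from typing import List, Tuple

# (class_id, class-specific score, center_x, center_y, width, height);
# coordinates are fractions of the image size.
Detection = Tuple[int, float, float, float, float, float]


def decode_yolo_grid(
    grid: List[List[List[float]]],
    num_boxes: int,
    num_classes: int,
    score_threshold: float = 0.25,
) -> List[Detection]:
    """Decode an S x S YOLO-style grid into thresholded detections (no NMS)."""
    size = len(grid)
    cell_length = num_boxes * 5 + num_classes
    detections: List[Detection] = []
    for row in range(size):
        for col in range(size):
            cell = grid[row][col]
            if len(cell) != cell_length:
                raise ValueError(f"cell ({row}, {col}) has {len(cell)} values, expected {cell_length}")
            class_probs = cell[num_boxes * 5:]  # Pr(class | object), shared by the cell's boxes
            for b in range(num_boxes):
                x, y, w, h, confidence = cell[b * 5 : b * 5 + 5]
                # x, y are offsets inside the cell; convert them to image-relative centers.
                center_x = (col + x) / size
                center_y = (row + y) / size
                for class_id, prob in enumerate(class_probs):
                    score = confidence * prob  # class-specific confidence
                    if score >= score_threshold:
                        detections.append((class_id, score, center_x, center_y, w, h))
    return detections


if __name__ == "__main__":
    # Hypothetical 2 x 2 grid, one box per cell, two classes (0 = face, 1 = window).
    grid = [[[0.0] * 7 for _ in range(2)] for _ in range(2)]
    grid[1][0] = [0.5, 0.5, 0.2, 0.3, 0.9, 0.8, 0.2]
    for det in decode_yolo_grid(grid, num_boxes=1, num_classes=2):
        print(det)
```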

Unlike other convolutional neural network-based object detectors, YOLO uses a single neural network to predict bounding boxes and class probabilities directly from the entire image in one evaluation. Because the whole detection pipeline is a single network, it can be optimized end-to-end directly on detection performance [14]. These characteristics have made YOLO widely recognized for fast object detection. Among the YOLO family, YOLOv3 [15], which improves on the original algorithm's bounding box prediction, class prediction, and feature extractor, was chosen for this study.
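For reference, YOLOv3 [15] predicts each box as offsets relative to its grid cell and an anchor (prior) box. With $(c_x, c_y)$ the offset of the cell from the top-left corner of the image, $(p_w, p_h)$ the anchor width and height, and $\sigma$ the logistic sigmoid, the network outputs $(t_x, t_y, t_w, t_h)$ are converted to the box center $(b_x, b_y)$ and size $(b_w, b_h)$ as:

$$
b_x = \sigma(t_x) + c_x, \qquad b_y = \sigma(t_y) + c_y, \qquad b_w = p_w e^{t_w}, \qquad b_h = p_h e^{t_h}
$$

YOLOv3 also predicts an objectness score for each box with logistic regression, uses independent logistic classifiers rather than a softmax for class prediction, and adopts Darknet-53 as its feature extractor.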

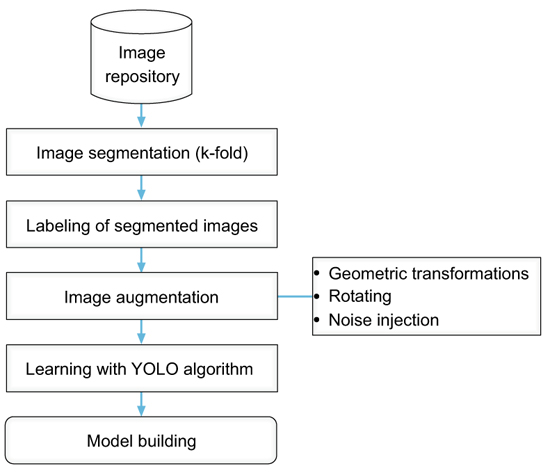

The procedure for constructing the YOLO model is presented in Fig. (3). The 2,126 raw images gathered on the road were augmented to 6,378 images using widely recognized augmentation techniques, such as geometric transformation, rotation, and noise injection [16], to build a reliable YOLO model. In each image obtained from camera 1 (Fig. 4), bounding boxes were drawn around the windows, the B pillar (which divides the first and second rows of seats), and the passengers' faces, and each box was labeled with its object class. Images from camera 3, which targets only the last row of seats, were labeled only for the occupants' faces. The images were split into training and test sets at a ratio of 8:2, and the trained YOLO model achieved an accuracy of approximately 95% at an Intersection over Union (IoU) threshold of 50% (Fig. 5).
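The IoU criterion above can be sketched as follows: a predicted box counts as a correct detection when its IoU with a ground-truth box is at least 0.5. The corner-coordinate box format and the seeded random 8:2 split helper are assumptions for illustration; the text does not state how the split was drawn.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)
T = TypeVar("T")


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def is_correct_detection(pred: Box, truth: Box, threshold: float = 0.5) -> bool:
    """A prediction matches a ground-truth box when IoU >= threshold (0.5 here)."""
    return iou(pred, truth) >= threshold


def split_train_test(items: Sequence[T], train_ratio: float = 0.8, seed: int = 0) -> Tuple[List[T], List[T]]:
    """Hypothetical helper: shuffle with a fixed seed, then split (8:2 by default)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]


if __name__ == "__main__":
    print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # overlap area 1, union area 7
    train, test = split_train_test(range(10))
    print(len(train), len(test))
```

One design choice worth noting, since the text does not say in which order these steps were applied: splitting the raw images before augmentation keeps augmented copies of the same photograph from appearing in both the training and test sets.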

2.2. System Components

The developed system is broadly divided into hardware and software. The hardware, photographed in Fig. (5), comprises three high-speed (300 frames per second) infrared video cameras, five infrared illuminators, and two lasers. Of the three cameras, one recognizes the number plates of violating vehicles and the other two count the occupants in the compartment. The infrared illuminators, operating at the same frequency as the cameras, are installed near their corresponding cameras. Each compartment camera is paired with two illuminators to maintain occupant detection capability regardless of window tinting or ambient light intensity. Two laser sensors trigger image capture for occupant detection; they also measure the speed of passing vehicles, making it possible to investigate whether occupant detection capability varies with vehicle speed.
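Speed measurement with two lasers amounts to dividing the distance between the beams by the time between the two beam interruptions. The sketch below assumes a hypothetical beam spacing of 2 m and timestamps in seconds; the actual spacing of the installed sensors is not given in the text. A second helper estimates how far a vehicle moves between consecutive frames of the 300 fps cameras.

```python
BEAM_SPACING_M = 2.0  # hypothetical distance between the two laser beams


def speed_kmh(t_first_s: float, t_second_s: float, spacing_m: float = BEAM_SPACING_M) -> float:
    """Vehicle speed from the times at which it breaks the first and second beams."""
    dt = t_second_s - t_first_s
    if dt <= 0:
        raise ValueError("second beam must be interrupted after the first")
    return spacing_m / dt * 3.6  # m/s -> km/h


def travel_per_frame_m(speed: float, fps: float = 300.0) -> float:
    """Distance (m) a vehicle at `speed` km/h moves between consecutive frames."""
    return speed / 3.6 / fps


if __name__ == "__main__":
    v = speed_kmh(0.0, 0.072)  # 2 m covered in 72 ms
    print(f"{v:.1f} km/h, {travel_per_frame_m(v) * 100:.1f} cm per frame")
```

At 100 km/h (about 27.8 m/s), a vehicle moves roughly 9 cm between consecutive frames at 300 fps, which indicates how much motion the occupant detector must tolerate at the highest tested speed.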

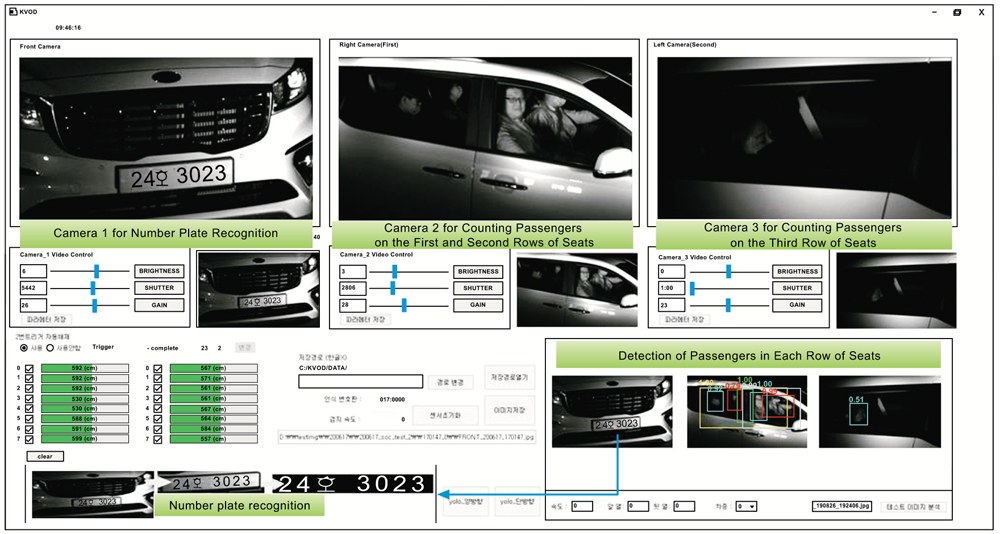

The software, shown in Fig. (6), captures images from the three cameras described above. It automatically adjusts the brightness, shutter speed, and aperture of each camera according to the external illumination. The detection results are presented in the lower-left part of Fig. (6). For number plate recognition, freely available open-source software for Korean vehicle registration numbers is used, achieving a satisfactory accuracy of more than 99%. Occupants are detected with the YOLOv3-based deep learning model. For reliable occupant detection in the first and second rows of seats, the B pillar and window areas are also detected and boxed, whereas, for simplicity, only the occupants themselves are detected in the last row of seats. Detection results are transmitted to the police server system (Fig. 2) over the TCP/IP protocol, which suits the instant message transfer that an HOV lane enforcement system requires.
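TCP delivers a continuous byte stream without message boundaries, so a roadside unit sending detection results needs some form of framing. The sketch below assumes a hypothetical scheme in which each result is serialized as JSON and prefixed with its 4-byte length; the field names (`plate`, `occupants`, `speed_kmh`, `violation`) are illustrative, not the system's actual message format. A local socket pair stands in for the connection to the police server.

```python
import json
import socket
import struct
from typing import Any, Dict

HEADER = struct.Struct("!I")  # 4-byte big-endian length prefix (hypothetical framing)


def send_message(sock: socket.socket, payload: Dict[str, Any]) -> None:
    """Serialize one detection result and send it with a length prefix."""
    body = json.dumps(payload).encode("utf-8")
    sock.sendall(HEADER.pack(len(body)) + body)


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, since recv() may return fewer than requested."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("socket closed before the full message arrived")
        buf.extend(chunk)
    return bytes(buf)


def recv_message(sock: socket.socket) -> Dict[str, Any]:
    """Read one length-prefixed JSON message."""
    (length,) = HEADER.unpack(_recv_exact(sock, HEADER.size))
    return json.loads(_recv_exact(sock, length).decode("utf-8"))


if __name__ == "__main__":
    roadside, server = socket.socketpair()  # stands in for a real TCP connection
    with roadside, server:
        send_message(roadside, {"plate": "PLATE-PLACEHOLDER", "occupants": 3,
                                "speed_kmh": 80.0, "violation": True})
        print(recv_message(server))
```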

3. RESULTS

3.1. Data Collection

To evaluate the system, including the hardware and software described above, field data were collected on a proving ground, where any desired speed can be maintained, using two types of recreational vehicles that account for around 80% of HOVs in Korea. The data were collected during the day and at night on November 25, 2020, as photographed in Fig. (7). The test vehicles, carrying one to six passengers, were driven at 20, 40, 60, 80, and 100 km/h. No lane changes or lane-straddling maneuvers, which might cause detection errors, were made while driving. In total, 245 images were used for evaluation.

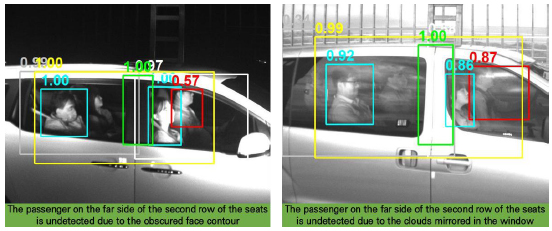

3.2. Numerical Analysis

The confusion matrix by number of passengers is presented in Table 1. All detection errors are undercounts: the error is highest for six passengers and lowest for one passenger. Detection errors by speed and number of occupants are shown in Table 2 and plotted three-dimensionally in Fig. (8). The average error was 9%, and the highest error, 80%, was recorded for vehicles with six passengers driven at 40 km/h. No error was observed in 19 of the 30 combinations of speed and number of passengers. To investigate whether detection errors differ notably by speed or number of passengers, an analysis of variance (ANOVA) was performed. As shown in Table 3, the ANOVA tests revealed no statistically significant differences at the 0.05 significance level, since the P-values (0.70 for speed; 0.33 for number of passengers) exceed that level. The errors also did not vary significantly with illumination. Typical cases of detection error are shown in Fig. (9). The developed system mostly undercounts occupants when their face contours are obscured by hair or are not clearly visible because of clouds reflected in the windows.

Table 1. Confusion matrix of actual (rows) versus detected (columns) number of passengers, in number of images.

| Actual \ Detected | 1P | 2P | 3P | 4P | 5P | 6P |
|---|---|---|---|---|---|---|
| 1P | 50 | 0 | 0 | 0 | 0 | 0 |
| 2P | 4 | 46 | 0 | 0 | 0 | 0 |
| 3P | 0 | 5 | 45 | 0 | 0 | 0 |
| 4P | 0 | 0 | 4 | 41 | 0 | 0 |
| 5P | 0 | 0 | 0 | 1 | 24 | 0 |
| 6P | 0 | 0 | 0 | 0 | 6 | 19 |
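The undercounting pattern in Table 1 can be checked directly from the matrix: every off-diagonal count lies below the diagonal, meaning the detected number is smaller than the actual one. This sketch computes per-class error rates from Table 1; because Table 2 reports error rates averaged over speeds, these pooled rates can differ slightly from its row averages. The helper names are hypothetical.

```python
from typing import List, Tuple

# Table 1: rows = actual passengers (1P..6P), columns = detected passengers (1P..6P).
CONFUSION: List[List[int]] = [
    [50, 0, 0, 0, 0, 0],
    [4, 46, 0, 0, 0, 0],
    [0, 5, 45, 0, 0, 0],
    [0, 0, 4, 41, 0, 0],
    [0, 0, 0, 1, 24, 0],
    [0, 0, 0, 0, 6, 19],
]


def per_class_error(matrix: List[List[int]]) -> List[float]:
    """Share of images in each actual class whose passenger count was wrong."""
    return [(sum(row) - row[i]) / sum(row) for i, row in enumerate(matrix)]


def under_over_counts(matrix: List[List[int]]) -> Tuple[int, int]:
    """Images counted too low (below the diagonal) and too high (above it)."""
    n = len(matrix)
    under = sum(matrix[i][j] for i in range(n) for j in range(n) if j < i)
    over = sum(matrix[i][j] for i in range(n) for j in range(n) if j > i)
    return under, over


if __name__ == "__main__":
    for k, err in enumerate(per_class_error(CONFUSION), start=1):
        print(f"{k}P: {err:.3f}")
    print("undercounts, overcounts:", under_over_counts(CONFUSION))
```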

Table 2. Passenger detection error rate by vehicle speed and number of passengers.

| Passengers | 20 km/h | 40 km/h | 60 km/h | 80 km/h | 100 km/h | Avg |
|---|---|---|---|---|---|---|
| 1P | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.00 |
| 2P | 0.0 | 0.0 | 0.1 | 0.1 | 0.1 | 0.06 |
| 3P | 0.0 | 0.0 | 0.0 | 0.1 | 0.4 | 0.10 |
| 4P | 0.0 | 0.0 | 0.0 | 0.3 | 0.2 | 0.10 |
| 5P | 0.0 | 0.2 | 0.0 | 0.0 | 0.0 | 0.04 |
| 6P | 0.2 | 0.8 | 0.2 | 0.0 | 0.0 | 0.24 |
| Avg | 0.03 | 0.17 | 0.05 | 0.08 | 0.12 | 0.09 |

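Table 3. One-way ANOVA of detection errors by vehicle speed and by number of passengers.

| Factor | Source | Deg. of Freedom | Mean Square | F Statistic | P-value |
|---|---|---|---|---|---|
| Speed | Between groups | 4 | 0.017 | 0.55 | 0.70 |
| Speed | Residual | 25 | 0.031 | - | - |
| Number of passengers | Between groups | 5 | 0.034 | 1.21 | 0.33 |
| Number of passengers | Residual | 24 | 0.028 | - | - |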
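The F statistics in Table 3 can be reproduced from Table 2 with a standard one-way ANOVA that treats each of the 30 speed and passenger combinations as one observation, which matches the degrees of freedom in Table 3 (4 + 25 and 5 + 24). This sketch computes only the mean squares and F statistics; P-values require the F distribution, available for example through `scipy.stats.f.sf` or `scipy.stats.f_oneway`, which are left out here to keep the example dependency-free.

```python
from typing import Sequence, Tuple

# Table 2 error rates: rows = 1P..6P, columns = 20, 40, 60, 80, 100 km/h.
ERRORS = [
    [0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.1, 0.1, 0.1],
    [0.0, 0.0, 0.0, 0.1, 0.4],
    [0.0, 0.0, 0.0, 0.3, 0.2],
    [0.0, 0.2, 0.0, 0.0, 0.0],
    [0.2, 0.8, 0.2, 0.0, 0.0],
]


def one_way_anova(groups: Sequence[Sequence[float]]) -> Tuple[int, int, float, float, float]:
    """Return (df_between, df_within, MS_between, MS_within, F) for a one-way ANOVA."""
    values = [x for g in groups for x in g]
    n, k = len(values), len(groups)
    grand_mean = sum(values) / n
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n - k
    ms_between = ss_between / df_between
    ms_within = ss_within / df_within
    return df_between, df_within, ms_between, ms_within, ms_between / ms_within


if __name__ == "__main__":
    by_speed = [list(col) for col in zip(*ERRORS)]  # 5 groups of 6 observations
    by_passengers = ERRORS                          # 6 groups of 5 observations
    for name, groups in (("Speed", by_speed), ("Number of passengers", by_passengers)):
        dfb, dfw, msb, msw, f = one_way_anova(groups)
        print(f"{name}: df=({dfb}, {dfw}), MS=({msb:.3f}, {msw:.3f}), F={f:.2f}")
```

Running it gives F ≈ 0.55 for speed and F ≈ 1.21 for the number of passengers, matching Table 3.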

Although the ANOVA tests identified no significant difference, errors for six passengers were higher than in the other cases. Improving performance for six passengers will probably require more training images collected under diverse conditions. Detection becomes more demanding as the number of passengers grows, yet the images used for training the model were distributed almost equally across one to six passengers.

4. CONCLUSION AND FUTURE STUDIES

Manual HOV lane enforcement by the police can cause safety problems and unnecessary delays when violators are pulled over to the roadside. To resolve these problems, an automatic HOV lane enforcement system was developed and evaluated in this study. The system, comprising three infrared cameras, multiple infrared illuminators operating at the same frequency as the cameras, and two laser sensors, captures images of the compartment and number plate of a passing vehicle, then counts the passengers and recognizes the registration number. A YOLOv3-based deep learning model was built to detect occupants, and open-source software was used for number plate recognition.

For a performance test, the two dominant HOV types in Korea, carrying one to six passengers, were driven on a proving ground at different speeds during the day and at night. In the evaluation with 245 images, the overall error was 9%. The errors showed no statistically significant differences with vehicle speed or number of occupants, suggesting that the system performs consistently across the tested conditions. Some errors occurred when face contours were obscured by hair or when clouds were reflected in the windows, implying that further enhancement with more real-world data under diverse conditions is needed. Nevertheless, for a prototype, the trained model's test accuracy of approximately 95% and the 9% average field detection error are encouraging outcomes. The developed system makes more efficient and safer HOV lane enforcement possible.

CONSENT FOR PUBLICATION

Not applicable.

AVAILABILITY OF DATA AND MATERIALS

Not applicable.

FUNDING

This work was supported by a grant (No. 20200432-001) from the Korea Institute of Civil Engineering and Building Technology (KICT).

CONFLICT OF INTEREST

The author declares no conflict of interest, financial or otherwise.

ACKNOWLEDGMENTS

Declared none.